TRI Authors: Kuan-Hui Lee, Matthew Kliemann, Adrien Gaidon, Jie Li, Chao Fang, Sudeep Pillai, Wolfram Burgard

All Authors: K.-H. Lee, M. Kliemann, A. Gaidon, J. Li, C. Fang, S. Pillai, W. Burgard

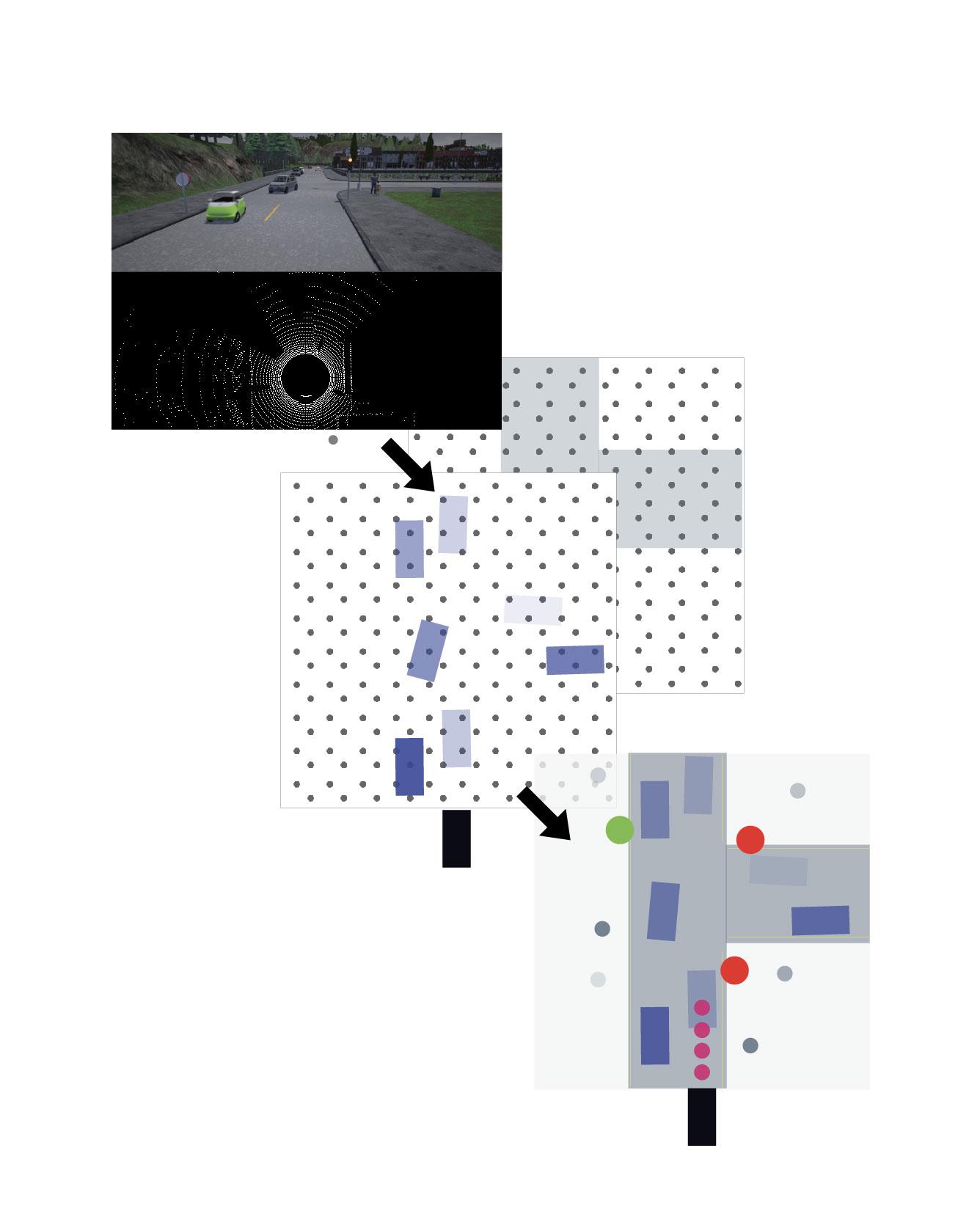

In autonomous driving, accurately estimating the state of surrounding obstacles is critical for safe and robust path planning. However, this perception task is difficult, particularly for generic obstacles and objects, because of changes in appearance and occlusion. To tackle this problem, we propose an end-to-end deep learning framework for LiDAR-based flow estimation in bird's-eye view (BeV). Our method takes pairs of consecutive point clouds as input and produces a 2-D BeV flow grid describing the dynamic state of each cell. Experimental results show that the proposed method not only estimates 2-D BeV flow accurately but also improves tracking performance for both dynamic and static objects.
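The abstract describes the output as a 2-D BeV flow grid that assigns a motion to each cell. The sketch below is not the PillarFlow network, which learns this grid end to end from the point cloud pair. It only illustrates the data representation under stated assumptions: a point cloud is rasterized into BeV cells, and a per-cell `(dx, dy)` flow grid is used to predict where each occupied cell moves in the next frame. The grid extent, the 0.5 m cell size, and the function names (`grid_shape`, `bev_occupancy`, `advect`) are hypothetical choices made for illustration.

```python
import numpy as np

# Hypothetical grid parameters (not from the paper): a 40 m x 40 m area
# centered on the ego vehicle, divided into 0.5 m square cells.
X_RANGE = (-20.0, 20.0)
Y_RANGE = (-20.0, 20.0)
CELL = 0.5


def grid_shape():
    """Number of BeV cells along x (rows) and y (columns)."""
    h = int(round((X_RANGE[1] - X_RANGE[0]) / CELL))
    w = int(round((Y_RANGE[1] - Y_RANGE[0]) / CELL))
    return h, w


def bev_occupancy(points):
    """Rasterize an (N, 3) point cloud into a binary BeV occupancy grid.

    The height (z) coordinate is dropped; points outside the grid are ignored.
    """
    h, w = grid_shape()
    rows = np.floor((points[:, 0] - X_RANGE[0]) / CELL).astype(int)
    cols = np.floor((points[:, 1] - Y_RANGE[0]) / CELL).astype(int)
    keep = (rows >= 0) & (rows < h) & (cols >= 0) & (cols < w)
    occ = np.zeros((h, w), dtype=bool)
    occ[rows[keep], cols[keep]] = True
    return occ


def advect(occ, flow):
    """Move each occupied cell by its flow vector (meters per frame).

    `flow` has shape (H, W, 2), where flow[i, j] = (dx, dy) for cell (i, j).
    Displacements are rounded to whole cells; cells leaving the grid are dropped.
    """
    h, w = occ.shape
    out = np.zeros_like(occ)
    rows, cols = np.nonzero(occ)
    new_rows = rows + np.round(flow[rows, cols, 0] / CELL).astype(int)
    new_cols = cols + np.round(flow[rows, cols, 1] / CELL).astype(int)
    keep = (new_rows >= 0) & (new_rows < h) & (new_cols >= 0) & (new_cols < w)
    out[new_rows[keep], new_cols[keep]] = True
    return out


# Usage: an object that moves 1.0 m along x between two consecutive frames.
pts_t = np.array([[0.1, 0.1, 0.0], [0.3, 0.2, 0.5]])
pts_t1 = pts_t + np.array([1.0, 0.0, 0.0])
occ_t, occ_t1 = bev_occupancy(pts_t), bev_occupancy(pts_t1)

flow = np.zeros(grid_shape() + (2,))
flow[occ_t] = (1.0, 0.0)  # assign the object's motion to its occupied cells
print(np.array_equal(advect(occ_t, flow), occ_t1))
```

In this toy example, a flow grid that correctly describes the object's motion maps the occupancy at time t onto the occupancy at time t+1. This is the kind of per-cell dynamic state that a tracker can consume, for both moving cells (nonzero flow) and static cells (zero flow).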

Citation: Lee, Kuan-Hui, Matthew Kliemann, Adrien Gaidon, Jie Li, Chao Fang, Sudeep Pillai, and Wolfram Burgard. "PillarFlow: End-to-end Birds-eye-view Flow Estimation for Autonomous Driving." arXiv e-prints (2020). To appear in IROS 2020.