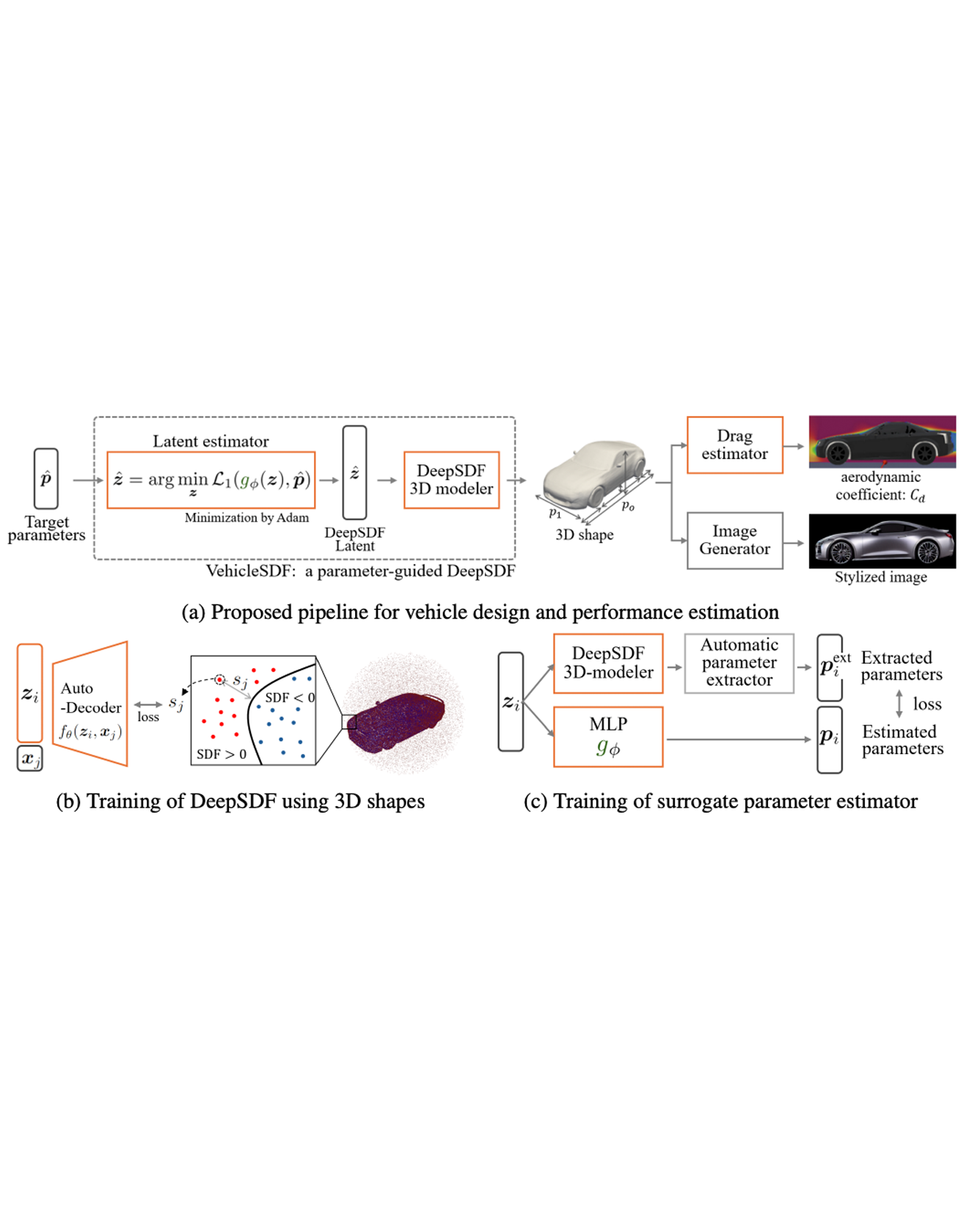

Generative AI models have made significant progress in automating the creation of 3D shapes, which has the potential to transform car design. In engineering design and optimization, evaluating engineering metrics is crucial. To make generative models performance-aware, so that they can create high-performing designs, surrogate models of these metrics are needed. However, current representations of 3D shapes either require extensive computational resources to learn or suffer from significant information loss, which impairs their effectiveness in surrogate modeling. To address this issue, we propose a new 2D representation of 3D shapes. We develop a surrogate drag model based on this representation to verify its effectiveness in predicting 3D car drag. To train our model, we construct a diverse dataset of 4,535 high-quality 3D car meshes labeled with drag coefficients computed from computational fluid dynamics simulations. Our experiments demonstrate that our model evaluates drag coefficients accurately and efficiently, with an R^2 value above 0.84 for various car categories. Our model is implemented using deep neural networks, which makes it compatible with recent AI image generation tools (such as Stable Diffusion) and marks a significant step towards the automatic generation of drag-optimized car designs. Moreover, we present a case study that uses the proposed surrogate model to guide a diffusion-based deep generative model for drag-optimized car body synthesis. We have made the dataset and code publicly available at https://decode.mit.edu/projects/dragprediction/.
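
For readers unfamiliar with the reported metric, the following is a minimal sketch of how an R^2 score (coefficient of determination) can be computed when comparing a surrogate model's predicted drag coefficients against simulated ones. The function name `r_squared` and the sample values are hypothetical and chosen only for illustration; they are not taken from the dataset or from the released code.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: error of the surrogate predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all true values are identical")
    return 1.0 - ss_res / ss_tot


# Hypothetical drag coefficients, for illustration only (not real data).
cd_simulated = [0.30, 0.32, 0.28, 0.35]  # stand-in for CFD labels
cd_predicted = [0.31, 0.31, 0.29, 0.34]  # stand-in for surrogate outputs

score = r_squared(cd_simulated, cd_predicted)
print(f"R^2 = {score:.3f}")
```

A score of 1 means the predictions match the simulated values exactly, while a score of 0 means the model does no better than always predicting the mean drag coefficient.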